Recently we have interacted with a few organizations on social media, and have been approached by several chatbots to start a customer service interaction.

Chatbots are autonomous programs that you may have encountered during a customer service experience, on your phone or online. They either follow a pre-engineered, structured path of communication or use AI to guide the “person” you are interacting with in helping you with common tasks and requests. If you’ve interacted with a brand online or on social media, no doubt you’ve seen them.

Increasingly, companies are turning to these technologies to automate customer service intake, answer routine questions, or guide behavior. Also increasing is the frustration and anger we feel as users when the “bot” doesn’t understand what we mean. Even worse, when the social engineering behind these chatbots’ messages is apparent, it leads to resentment.

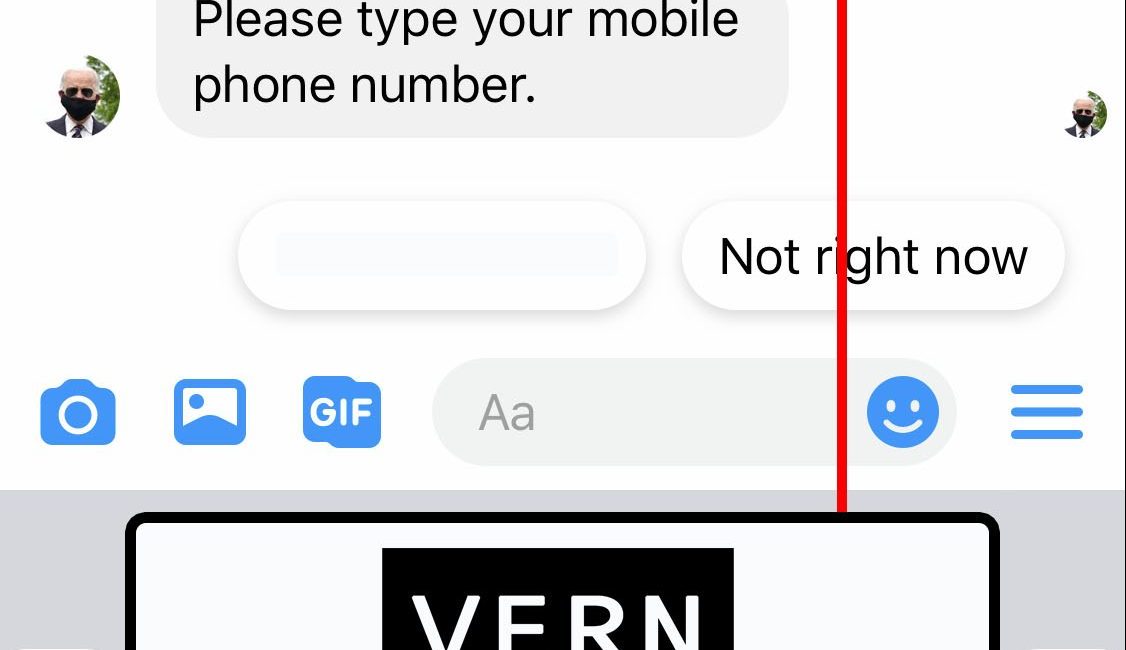

This recent exchange between a user (me) and the Biden campaign shows why emotional scoring is essential when systems interact with people autonomously. We started with an interaction, which triggered an instant message through Facebook’s instant messenger program. The campaign asked routine questions to identify the user, and then asked me to join their email list.

That’s when the fun began:

Like you all, I don’t want to join another never-ending email list where I get bombarded by emails constantly. It made me a little angry, and so I used sarcastic humor to “get back” at the bot.

I fired up V.E.R.N.’s humor detector and our brand-new anger detector and got the following score: 66% anger and 51% humor. That shows a near certainty of anger and a better-than-average chance of humor, which any human can see is sarcasm.
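To make that reading concrete, here is a minimal sketch of how two detector scores could be combined into a single label. It is not V.E.R.N.’s actual logic or API: the `interpret` function, the score dictionary layout, and the 50% cutoff are all assumptions made for illustration, with only the 66% and 51% figures taken from the exchange above.

```python
from typing import Dict

# Hypothetical cutoff: the post treats a score just above 50% as a real signal.
SIGNAL_THRESHOLD = 0.50


def interpret(scores: Dict[str, float], threshold: float = SIGNAL_THRESHOLD) -> str:
    """Turn anger and humor scores (0.0 to 1.0) into one rough label."""
    angry = scores.get("anger", 0.0) > threshold
    funny = scores.get("humor", 0.0) > threshold
    if angry and funny:
        # Anger delivered as a joke is what most people would call sarcasm.
        return "sarcasm"
    if angry:
        return "anger"
    if funny:
        return "humor"
    return "neutral"


# Scores reported for the first reply in this post.
print(interpret({"anger": 0.66, "humor": 0.51}))
```

With the scores from this exchange, both signals clear the assumed cutoff, so the sketch labels the message as sarcasm.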

But the bot didn’t catch that. Instead, it was clueless to my building anger and resentment, and insisted on moving forward to achieve its own goals. Bots have to get better, and emotional detection like V.E.R.N. will help.

Here’s what happened next:

“Fine….whatever” is a passive-aggressive statement, so V.E.R.N. detected it as anger. As with most passive-aggressive statements, the anger is veiled and, as expected, shows up as moderately angry (51%).

This is what we all experience with chatbots: when they cannot understand us, it feels like the bots don’t listen. By extension, the user feels that the organization doesn’t listen and doesn’t care enough to validate their feelings. Bots aren’t emotional, but boy, we sure are! This invalidation of customers or communities is a very real thing, and is one of the largest complaints people have about interacting with companies.

Now, the bot wants to complete its goals so badly that it moves to accomplish them, despite my obvious objections:

As anyone would, I got frustrated. Then I made a joke about the notoriously low pay for campaign staffers (who have been replaced by an even lower-cost alternative: chatbots). A human would likely have detected this as humor.

The bot? Not so much.

Instead of realizing that I was growing frustrated, the chatbot violated several covenants of customer service to get to its pre-programmed goal. Needless to say, this type of autonomy has negative consequences for the impression the organization makes. While this exchange is from the Biden campaign, there are thousands of chatbots everywhere that do not “get it” either.

V.E.R.N. was made to aid computers when they interact with humans. As you can see, this type of detection could have led to a much better outcome for the message and its intent. I might have signed up, contributed, and shared…if I had been understood better.

V.E.R.N. can be integrated with a chatbot, plugged into your robot’s logic, used to power your toy’s ability to interact with children, or put to work in your marketing and advertising. It can help you determine how people would “take” a message, and what they mean “by” their message.
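As a rough sketch of what the chatbot case might look like, the snippet below checks a user’s message for anger before the bot pushes its next goal. Everything here is hypothetical: `choose_reply`, `stand_in_scorer`, the reply wording, and the 50% cutoff are illustration only, and the scorer is passed in as a plain function so that no real V.E.R.N. API is implied.

```python
from typing import Callable, Dict

Scores = Dict[str, float]

# Hypothetical de-escalation text; a real bot would use its own wording.
BACK_OFF_REPLY = "Sorry about that. I won't ask again. Is there anything else I can help with?"


def choose_reply(
    message: str,
    score_message: Callable[[str], Scores],
    goal_prompt: str,
    anger_threshold: float = 0.50,  # assumed cutoff, not a documented value
) -> str:
    """Return the bot's next line, backing off when the user reads as angry."""
    scores = score_message(message)
    if scores.get("anger", 0.0) > anger_threshold:
        # The user is upset: drop the pre-programmed goal for now.
        return BACK_OFF_REPLY
    return goal_prompt


def stand_in_scorer(message: str) -> Scores:
    """Stand-in for an emotion detector, using the 51% anger score from this post."""
    canned = {"Fine....whatever": {"anger": 0.51}}
    return canned.get(message, {})


print(choose_reply("Fine....whatever", stand_in_scorer, "Great! What's your email?"))
```

The design choice worth noting is that the emotion check sits in front of the goal, so the bot’s script only advances when the user is not signaling anger.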

We’d love to see autonomous systems understand human interactions better. Let us know if we can help your system understand humanity.