Imagine you are on a date and you lean in for the first kiss, and the object of your affection says to you:

“I positive you so much! I negative without you. My life before you I felt so mixed, so neutral. Now I see positive all over the place!”

Sounds pretty absurd, doesn’t it? The first reaction one would have is thinking the other person must have just arrived on Earth. The feelings are in the right ballpark but the communication of the emotion just isn’t right.

Positive, negative, neutral and mixed don’t really tell you what you need to know. Sentiment analysis can only tell us which half of the world we live in. But if you’re looking for a city, state or even a nation or continent…that answer isn’t enough. Sure, you can see Jupiter with your naked eye but without a telescope you may mistake it for a star. The unit of measurement is too broad, or you’re using the wrong instrument. You might find the person is negative, but mistake their anger for sadness. Whoops…

Sentiment analysis tools are inaccurate

Even though heavy hitters in tech make billions of dollars selling sentiment analysis every year, there’s little difference between their tech and the open source technology on which it is based.¹ They’ll tell you they are accurate around or over 80% of the time, which on its face seems great. But by that logic, diagnosing a patient as “sick” is just as accurate as diagnosing them with stage 4 pancreatic cancer.

In a customer service scenario, would you rather know that someone is negative, or what they actually feel negative about? Finding out that they aren’t as ticked off by the wait in line as they are by a minor feature of your product could have huge implications for your product design and marketing. The same goes for customer service tools and chatbots that are designed to interact with a human being in real time. How should your chatbot respond to a customer message that scores 45% negative? Would knowing how angry or sad they were, and what in particular made them that way, provide you with more actionable information? Does your CSR have extensive emotional intelligence training, and can they understand when a customer makes a joke?

In telehealth, learning a patient is “negative” doesn’t give you as much insight into their well-being as discovering they’re communicating sadness with even greater frequency. Does finding your patients respond “mixed” to your post-visit surveys give you anything of value?

Let me answer that question with another question: Does a clock with only AM/PM tell you enough about what time it is during your day? Sentiment analysis, like a broken clock, is only accurate twice a day…when the observation coincidentally aligns with reality.

So then why do we settle for emotional recognition that doesn’t tell us anything about the emotions present?

We don’t have to.

Meet V.E.R.N.

V.E.R.N. is a patented AI system that detects emotions in communication. It does so by analyzing the written word, or by converting audible speech to text. Our process reviews one sentence at a time, detecting latent emotional clues, or “emotives,” that are subconsciously present in our communication. We provide analysis in real time, without the need to pre-process or train on a ton of data. You get the analysis back in milliseconds, with a confidence level from 0 to 100 for each emotion we detect. In fact, you can get it installed and start analyzing emotions in minutes.

We created V.E.R.N. to solve the problems with sentiment analysis, and to provide a real time solution for analyzing emotions. For AI and robotics to evolve, and for the automation revolution to continue, understanding emotions is an absolutely critical step. We’re not the only ones who think so.²

How V.E.R.N. works

V.E.R.N. is designed to act like a human receiver in the transactional model of human communication. In this theoretical framework, there is a sender of information, a medium through which the information travels, noise, and a receiver. A sender encodes the message to impart information and transmits it via a medium, and the receiver decodes the message and reacts. Anywhere that information isn’t clearly understood, we call that noise. Noise can be physical interference, like a hum from electromagnetic interference. Noise can also be a misunderstanding of the sender’s message, due either to a lack of knowledge or to a failure to understand the sender’s frame of reference.

Other systems and products have taken a much more complicated approach, including recreating the brain (think vast neural networks), instead of focusing on the process that underlies the phenomena. This unnecessarily complicates the process and adds tremendous time and financial costs. Here’s an example:

“Life is like a box of chocolates, they both end sooner for fat people.”

Just think of the level of logic necessary to understand that…

You’d need your program to know a lot about a box of chocolates. You’d have to program it to figure out that there is a finite number of candies in a box. You’d have to add the knowledge that people can become overweight by eating too many sweets…and that the box isn’t likely to last very long. OH AND the knowledge that this choice makes people obese, and that obese people die sooner than other people.

Whew! That’s a lot of logic.

That requires a sophisticated set of algorithms, mathematics, probabilities, and a never-ending set of “proper” data on which to train. And you only got the computer to detect one joke for the effort. Incidentally, V.E.R.N. scores that sentence at 66% confidence of humor in the message. So it’s already a lot more accurate. Sentiment analysis tools rate that sentence at between 80% and 100% confidence of being positive. As a big guy myself, there’s nothing “positive” about that sentence. It’s humor, even though it may offend me.

So instead of hiring all the computer scientists and engineers in this year’s class and spending hundreds of millions of dollars, we took a markedly different approach. We aren’t trying to be the brain. Only the ear, tuned to find emotions. Like a person would in real life.

But can you really detect emotions by using only the words people use?

Yeah. And you do too. Every day.

Words are labels for the physical world and how we interact with it. In essence, we follow the same methods that advanced machine learning systems follow, in that we learn via classical and operant conditioning. Sometimes that learning is supervised, sometimes it is only partially supervised, and sometimes it happens completely on our own (unsupervised). Only, we’re doing it naturally all the time and have since birth.

We believe that words are the best way to detect emotions in communications. And we’re not the only ones.³ Several researchers found that written words contain a lot of information about the emotional state of the sender. The sender takes additional time to cognitively form and translate thoughts into words with the express purpose of communicating the information; therefore it is a better representation of intent than other forms of communication, say non-verbal ones such as body language and tone.

Still not convinced? Here’s a thought exercise you can try right now:

Concoct a story about the first time you fell in love using only facial expressions. Or, try to tell someone what you’re angry about using only tones and grunts and vocalizations.

Spoiler alert: You can’t.

Without analysis of the words in communication, you’re missing the message completely. It’s like eating the sesame seeds off of a bun and claiming that only the sesame seeds make the burger. Which is nonsense. We all know that. Yet, some organizations try to convince you that you can and eagerly take your money.

Some claim you can only truly understand emotions by facial expressions. While there is some science behind universal expressions across cultures, you can’t tell a story only with facial expressions. (See above).

And using facial recognition is fraught with bias and error. There are reasons these systems have been withdrawn from the market. In some cases, they were trained on biased data. In other cases, the technological method of comparing contrasting pixels is problematic with darker skin tones, rendering it biased.

We went to a convention (B.C.-Before COVID), and had the opportunity to try facial and emotional recognition in state-of-the-art technologies in Silicon Valley. I stood there and told my colleague in angry, vulgar ways how much I despised him and wanted to commit egregious acts of violence on him and his family. I did so all while staring into the camera. I did so without changing my expression…so with a “poker face.”

What did these systems capture? Nothing. (Although they did guess I was about 8 years younger than I am on average, so that was cool. And of course I was only saying these mean things to my colleague to prove a point).

Some claim that only by analyzing the tone of a message from an audio source can you truly understand emotions. Which would be great, except that we also understand written communication, which has no tone at all. So right there, the logic of their argument breaks down. Granted, tonality can shift the receiver’s interpretation, and the sender often uses it intentionally to do so, but tone does not stand on its own. It needs the words to work.

Need the words to work

Let’s take a look at a real-world example. First, we will provide you with a real-world review of a business. It will be followed by sentiment analysis from one of the largest tech companies in the world, including how they scored the corpus. Then, we will provide you with what V.E.R.N. found in the same review. In doing so, we hope you’ll see what value V.E.R.N. could have in your organization.

First the review:

“I just wanted to find some really cool new places I’ve never visited before but no luck here. Some of these suggestions are just terrible…I had to laugh! Most suggestions were just your typical big cities, restaurants and bars. Nothing off the beaten path here. I don’t want to go these places for fun. Totally not worth getting this!”

And now the analysis of the corpus:

Competitor’s score

NEGATIVE: 0.72

MIXED: 0.17

POSITIVE: 0.06

NEUTRAL: 0.05

Yeah, it’s mostly negative. Sure. That’s cool. It tells you the customer wasn’t happy, and that gives you something to work with. But wouldn’t you like to know what in particular they may be upset with, or are really joking about?

V.E.R.N.’s score

“I just wanted to find some really cool new places I’ve never visited before but no luck here.” (51% anger, 90% humor)

Okay now we’re talking! They’re a little angry, “miffed” maybe? Certainly not happy that their goals weren’t met. And, they’re using some humor too, so that could tell you that this person is using sarcasm.

“Some of these suggestions are just terrible…”(33% anger, 33% humor)

Hmm. Looks like a little anger and humor, but seems like it’s more of a declarative sentence of fact based on the individual’s opinion.

“I had to laugh!” (51% anger, 12.5% humor)

Yeah, this person isn’t joking…they’re more angry now. But why?

“Most suggestions were just your typical big cities, restaurants and bars.” (12.5% anger, 51% humor)

Not enough of an anger signal, but it is picking up a borderline humor signal. Perhaps the customer is showing a sarcastic disdain for the suggestions?

“Nothing off the beaten path here. (33% anger, 0% humor) I don’t want to go these places for fun. (12.5% anger, 33% humor) Totally not worth getting this! (33% anger, 0% humor)”

These are statements of fact. The reviewer doesn’t want to go to these places for fun, they prefer things that are less popular and therefore the product wasn’t worth getting.

In the V.E.R.N. analysis, you can see where the problem lies. It gives you more actionable information on which to base your response. And, if you understand their emotions as a receiver, you are perceived as “empathizing” with them. It can mean the difference between replying to the customer with a boilerplate apology and serving their needs, turning a frown into a smile or a bad review into a repeat customer.
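
To make the sentence-by-sentence reading above concrete, here is a minimal Python sketch that takes the scores quoted in this walkthrough and flags the sentences that carry a signal. The 51% cutoff (`SIGNAL_THRESHOLD`) and the `flag_signals` helper are illustrative assumptions for this post; they are not part of V.E.R.N. itself, and the scores are simply copied from above rather than computed.

```python
# Scores copied from the walkthrough above: (sentence, anger %, humor %).
REVIEW_SCORES = [
    ("I just wanted to find some really cool new places I've never "
     "visited before but no luck here.", 51.0, 90.0),
    ("Some of these suggestions are just terrible...", 33.0, 33.0),
    ("I had to laugh!", 51.0, 12.5),
    ("Most suggestions were just your typical big cities, restaurants "
     "and bars.", 12.5, 51.0),
    ("Nothing off the beaten path here.", 33.0, 0.0),
    ("I don't want to go these places for fun.", 12.5, 33.0),
    ("Totally not worth getting this!", 33.0, 0.0),
]

# Assumption: treat a confidence of 51% or higher as a signal worth acting on.
SIGNAL_THRESHOLD = 51.0


def flag_signals(scores, threshold=SIGNAL_THRESHOLD):
    """Return (sentence, [emotions at or above threshold]) for flagged sentences."""
    flagged = []
    for sentence, anger, humor in scores:
        emotions = []
        if anger >= threshold:
            emotions.append("anger")
        if humor >= threshold:
            emotions.append("humor")
        if emotions:
            flagged.append((sentence, emotions))
    return flagged


for sentence, emotions in flag_signals(REVIEW_SCORES):
    print(f"{', '.join(emotions)}: {sentence}")
```

With this cutoff, three of the seven sentences are flagged, which is already more to work with than a single “negative” label for the whole review.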

And it’s not just limited to product reviews. Consider:

“When you lose a person you love so much, surviving the loss is difficult.”

This sentence to a human being is clearly negative, sad and possibly depressed. We feel empathy towards the sender since we understand that the closer you are to someone, the greater the feeling of loss when that someone dies. Like most sentences in human communication, there is a good deal of nuance.

Another sentiment analysis tool scored this sentence as: 46.6% positive, 13.6% neutral and 5.4% negative. V.E.R.N. scores that as: 12.5% anger, 12.5% humor and 51% sadness. Indeed, we can see how V.E.R.N.’s analysis can provide more value to the receiver…and to your business, project or product.

V.E.R.N. is real time

We created the software to respond in real time to real time communications. It works right out of the box, without having to spend weeks or months training the model on your legacy data. (Data which would have to be externally valid, or it would skew the interpretations and render the analysis moot.) It responds in milliseconds, allowing you to integrate it into your stack without noticeable latency.

That way, your chatbots can analyze and react in real time too. Because V.E.R.N. is so quick, the end user gets the analysis just as the message has finished being delivered. So your chatbot can recognize a joke instantly, before a person even could, and increase the user’s affinity towards it by responding appropriately. So your customer service representative can tell if a customer is a little put off, or is REALLY angry and the situation needs to be escalated to a manager. Or if it was just a joke…
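
As a rough illustration of that kind of routing, here is a small Python sketch. Everything in it is hypothetical: the `route_message` function, the action names and the thresholds are our assumptions for the sake of the example, and the input is just a dictionary of per-emotion confidence scores from 0 to 100.

```python
def route_message(scores, escalate_at=80.0, signal_at=51.0):
    """Pick a next step from per-emotion confidence scores (0-100).

    `scores` is a dict such as {"anger": 51.0, "humor": 90.0}.
    The thresholds and action names are hypothetical placeholders.
    """
    anger = scores.get("anger", 0.0)
    humor = scores.get("humor", 0.0)

    # Very strong anger: hand the conversation to a human manager.
    if anger >= escalate_at:
        return "escalate_to_manager"
    # Humor is the dominant signal: treat the message as a joke.
    if humor >= signal_at and humor > anger:
        return "acknowledge_joke"
    # Moderate anger: the customer is put off but not furious.
    if anger >= signal_at:
        return "apologize_and_assist"
    return "continue_conversation"


print(route_message({"anger": 51.0, "humor": 90.0}))
print(route_message({"anger": 90.0, "humor": 10.0}))
```

In practice you would tune the cutoffs to your own customers and channels; the point is only that per-emotion scores give you something to branch on.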

It’s real time so you can track how people are reacting to your new product. Or candidacy. Or how they might feel about a new movie you want to produce.

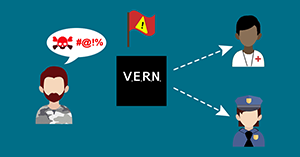

Or in order to track threats and risk.

We created V.E.R.N. to help solve these problems and open up a new frontier in emotion detection. We’ve been working through the science and engineering necessary to provide a system that’s great out of the box, and only gets better the more it’s used. In real time, for a real time world.

And it’s performing as expected: in our internal testing, and so far in real world applications, we’re above 80% accuracy at finding emotions. Not nuke-a-city-to-get-a-fly accurate, but granular enough that there is real value in the analysis. Like catching the fly with chopsticks.

If you’d like some more information on V.E.R.N., please contact us today

References:

1. “Measuring Service Encounter Satisfaction with Customer Service Chatbots using Sentiment Analysis,” Feine, Morana, Gnewuch, 2019. 14th International Conference on Wirtschaftsinformatik, February 24-27, 2019, Siegen, Germany

2. “Westworld isn’t happening anytime soon because AI can barely even recognize human emotion” (https://apple.news/AgtvMIlinTga0HyHWzisNoA); “What makes us laugh is on the frontier between humans and machines” (https://newsroom.cisco.com/feature-content?type=webcontent&articleId=1938500); “Making AI more emotional is essential to progress in AI universally” (https://www.forbes.com/sites/charlestowersclark/2019/01/28/making-ai-more-emotional-part-one/#7bbc354c5fc1)

3. Tausczik, Y.R., Pennebaker, J.W.: The Psychological Meaning of Words: LIWC and Computerized Text Analysis Methods. Journal of Language and Social Psychology 29, 24–54 (2010); Nerbonne, J.: The Secret Life of Pronouns. What Our Words Say About Us. Literary and Linguistic Computing 29, 139–142 (2014); Küster, D., Kappas, A.: Measuring Emotions Online: Expression and Physiology. In: Holyst, J.A. (ed.) Cyberemotions: Collective Emotions in Cyberspace, pp. 71–93. Springer International Publishing, Cham (2017)
